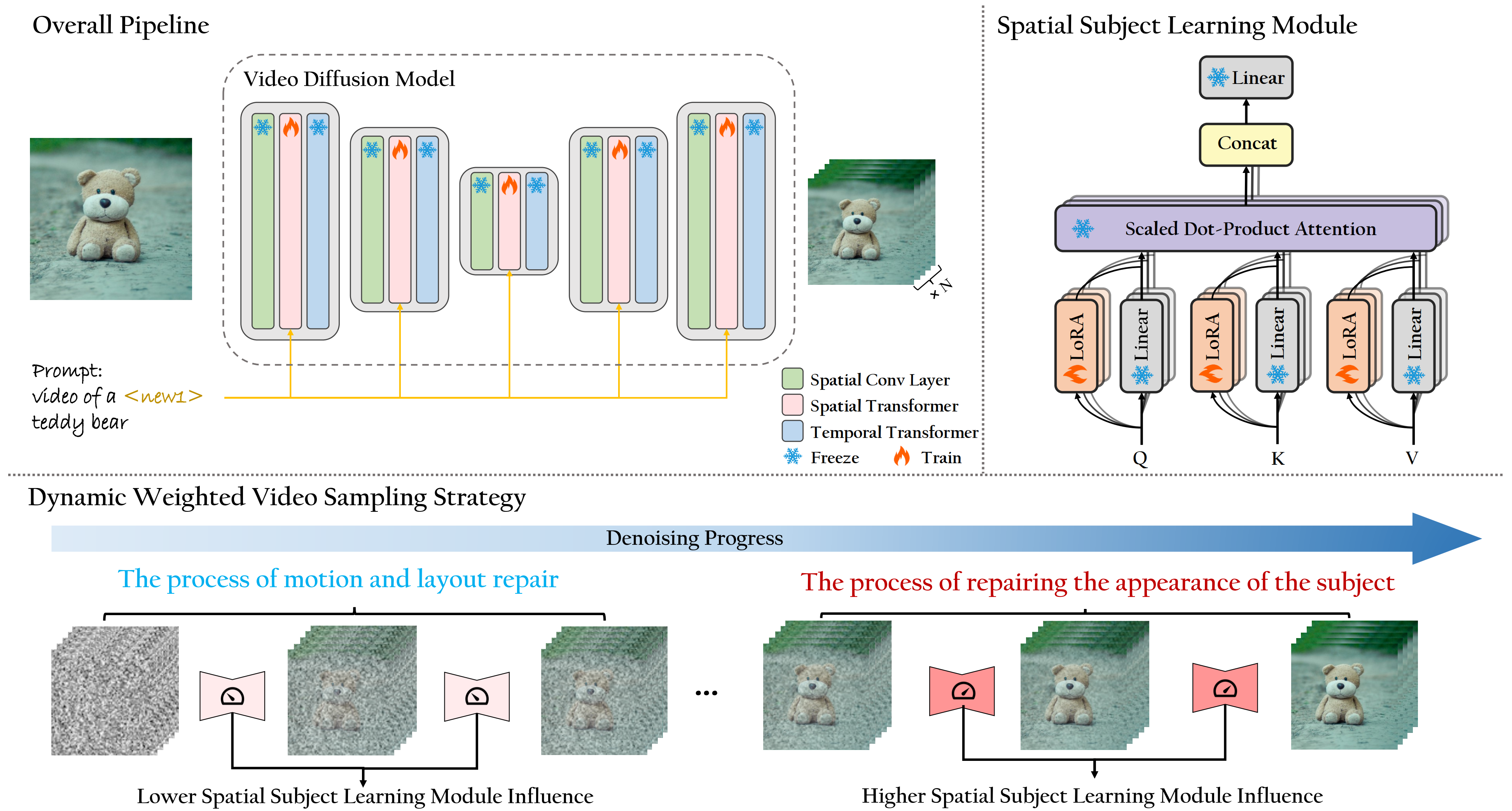

Customized video generation aims to generate high-quality videos guided by text prompts and reference images of a subject. However, because subject learning fine-tunes the model only on static images, it disrupts the ability of video diffusion models (VDMs) to combine concepts and generate motion. To restore these abilities, some methods use additional videos similar to the prompt to fine-tune or guide the model. This requires frequently changing the guiding videos, and even re-tuning the model, whenever a different motion is desired, which is very inconvenient for users. In this paper, we propose CustomCrafter, a novel framework that preserves the model's motion generation and concept combination abilities without requiring additional videos or fine-tuning to recover them. To preserve concept combination ability, we design a plug-and-play module that updates a small number of parameters in VDMs, enhancing the model's ability to capture appearance details and to combine new subjects with other concepts. For motion generation, we observe that VDMs tend to recover the motion of the video in the early stage of denoising and focus on recovering subject details in the later stage. We therefore propose a Dynamic Weighted Video Sampling Strategy. Leveraging the pluggability of our subject learning module, we reduce its impact on motion generation in the early stage of denoising, preserving the motion generation ability of VDMs. In the later stage of denoising, we restore the module to repair the appearance details of the specified subject, thereby ensuring the fidelity of the subject's appearance. Experimental results show that our method significantly outperforms previous methods.
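The dynamic weighting idea can be sketched as a per-step weight applied to the subject learning module during sampling. This is a minimal illustrative sketch, not the authors' implementation: the two-phase shape follows the description above, but the switch point (`switch_fraction`), the reduced weight (`early_scale`), and the `denoise_step` callback are hypothetical names and values chosen for illustration.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def lora_scale_schedule(num_steps: int, switch_fraction: float = 0.4,
                        early_scale: float = 0.0, late_scale: float = 1.0) -> List[float]:
    """Return one weight per denoising step (step 0 = noisiest).

    Hypothetical two-phase schedule: steps before the switch point use a
    reduced weight (early stage, motion), the rest restore the module
    (later stage, subject appearance details).
    """
    if num_steps <= 0:
        raise ValueError("num_steps must be positive")
    if not 0.0 <= switch_fraction <= 1.0:
        raise ValueError("switch_fraction must be in [0, 1]")
    # Number of early-stage steps, rounded to the nearest whole step.
    switch_step = int(round(num_steps * switch_fraction))
    return [early_scale if i < switch_step else late_scale for i in range(num_steps)]


def sample_with_dynamic_weight(x: T, num_steps: int,
                               denoise_step: Callable[[T, int, float], T],
                               switch_fraction: float = 0.4,
                               early_scale: float = 0.0) -> T:
    """Run a denoising loop, passing the scheduled module weight to each step.

    `denoise_step(x, step, scale)` is a hypothetical callback standing in for
    one VDM denoising step with the subject module scaled by `scale`.
    """
    schedule = lora_scale_schedule(num_steps, switch_fraction, early_scale)
    for step, scale in enumerate(schedule):
        x = denoise_step(x, step, scale)
    return x
```

For example, `lora_scale_schedule(10)` gives four steps at `0.0` followed by six at `1.0`. A hard switch is the simplest choice; a smooth ramp between the two weights would be an equally plausible variant under these assumptions.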

Overview of CustomCrafter. For subject learning, we adopt LoRA to construct a Spatial Subject Learning Module, which updates the Query, Key, and Value parameters of the attention layers in all Spatial Transformer modules. When generating videos, we divide the denoising process into two phases: the motion layout repair process and the subject appearance repair process. We reduce the influence of the Spatial Subject Learning Module during the motion layout repair process, and restore it during the subject appearance repair process to repair the details of the subject.
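A LoRA update on the Query, Key, and Value projections, with a scale that can be lowered or restored at sampling time, can be sketched in plain Python as below. This is a minimal sketch under stated assumptions, not the authors' code: `LoRALinear`, `SpatialSubjectLoRA`, and `matmul` are hypothetical names, the zero-initialized `B` follows common LoRA practice, and the usual `alpha / rank` factor is folded into `scale` for simplicity.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a scaled low-rank update B @ A."""

    def __init__(self, weight: Matrix, rank: int, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # frozen pretrained projection
        # Trainable factors: A (rank x d_in) small random, B (d_out x rank) zeros,
        # so the update is exactly zero before training.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = 1.0  # lowered or restored at sampling time

    def __call__(self, x: List[float]) -> List[float]:
        col = [[v] for v in x]  # column vector
        base = matmul(self.weight, col)
        delta = matmul(self.B, matmul(self.A, col))
        return [base[i][0] + self.scale * delta[i][0] for i in range(len(base))]


class SpatialSubjectLoRA:
    """Hypothetical container: LoRA on the Q, K, V projections of one spatial attention layer."""

    def __init__(self, wq: Matrix, wk: Matrix, wv: Matrix, rank: int, seed: int = 0):
        self.q = LoRALinear(wq, rank, seed)
        self.k = LoRALinear(wk, rank, seed + 1)
        self.v = LoRALinear(wv, rank, seed + 2)

    def set_scale(self, scale: float) -> None:
        # One knob controls the module's influence on all three projections.
        for proj in (self.q, self.k, self.v):
            proj.scale = scale

    def project(self, x: List[float]) -> Tuple[List[float], List[float], List[float]]:
        return self.q(x), self.k(x), self.v(x)
```

Setting the scale to `0.0` recovers the frozen pretrained projections exactly, which is what makes the module pluggable: during the motion layout repair phase the scale would be reduced, and during the subject appearance repair phase it would be set back to `1.0`.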

Qualitative comparison of customized video generation with both subjects and motions.

Without guidance from additional videos, our method significantly outperforms previous methods in concept combination.


@article{wu2024customcrafter,
  title={CustomCrafter: Customized Video Generation with Preserving Motion and Concept Composition Abilities},
  author={Wu, Tao and Zhang, Yong and Wang, Xintao and Zhou, Xianpan and Zheng, Guangcong and Qi, Zhongang and Shan, Ying and Li, Xi},
  journal={arXiv preprint arXiv:2408.13239},
  year={2024}
}